Business Introduction

The Client is a US-based company having its IT operations in India. Their business is to aggregate the delivery service providers into a platform where the Senders, mostly independent B2B Business Owners can find suitable Delivery partners and have their Orders delivered to the Customers seamlessly. The Client has around 50+ tenants in the current year 2022 that are using the platform and the numbers are rapidly growing. They have 1,300+ Stores and 400+ Delivery partners operating. Around 2+ million unique orders were delivered in the current year.

Technical background

The SaaS platform is built using Angular and NodeJS and data are stored in Mongo DB. There is a distinct Mongo DB database created for each tenant. All technical components are hosted in the cloud and access to it is restricted with very strict security policies and protocols.

Business Objectives

-

Monthly / Quarterly business (MBR / QBR) reviews are done both internally by the management team and with the individual tenants by the Customer Success teams. The MBR/QBR reports consist of Metrics related to Sales, Operational efficiency, missed opportunities, data accuracy, customer feedback, etc. There must be a consolidated reporting DB that collates data for all tenants and MBR/QBR reports can be generated automatically anytime, but not once in a month or Quarter.

-

The Power BI report must be embedded into the Client customer portal finally. Each tenant should be shown only their data. But a Customer Service team member from the Client should be able to see all tenants.

Scope of Work

-

Extract Data from each tenant’s Mongo DB instance and load it into a reporting database.

-

Transform, Clean, and Enrich the data before loading it into the reporting database.

-

Create Power BI reports using this consolidated data.

-

Embed the Power BI report in the Customer Portal.

Data Flow Design

-

Python program is scheduled to run at a specific time every day that connects each tenant’s database in a loop and extracts the required datasets into a local folder.

-

Azure Data Factory (ADF) is automatically triggered when the Python extraction is complete.

-

ADF access the downloaded data files, transform and load the final data into Azure SQL DB

-

Business logic to derive the metrics needed in the MBR/QBR is implemented as part of the ADF pipeline.

-

Once the transformed Data is loaded into Azure SQL DB, the Power BI refresh is automatically triggered by ADF as the last step.

Solution Design

Technical Components used

-

Virtual machine (EC2 in AWS) hosts all the development, sandbox, production programs, and Power BI files.

-

Data Extractor Python program runs as part of the Task scheduler in this Virtual machine that extracts data from MongoDB and stores it in CSV format.

-

Azure Data Factory (ADF) loads these CSV files into Azure Database for PostgreSQL database hosted in Azure.

-

Azure Database for PostgreSQL is used to store clean data which is readily consumed by Power BI reports.

-

Power BI is used to visualize the data with slice and dice, drill down, and many more analytical features.

-

Azure Login Apps – for automating the data flow completely, from Python à ADF à SQL à Power BI.

-

Power BI Embedding Capacity (A2) – to publish the report and later embed it as part of their Customer Portal.

-

Power Bi API – used to upscale and downscale the embedding capacity automatically. Also, call Power BI to refresh directly from ADF once the Data transformation is over.

Challenges & Solution

While implementing the solution certain challenges were faced and rectified as follows

-

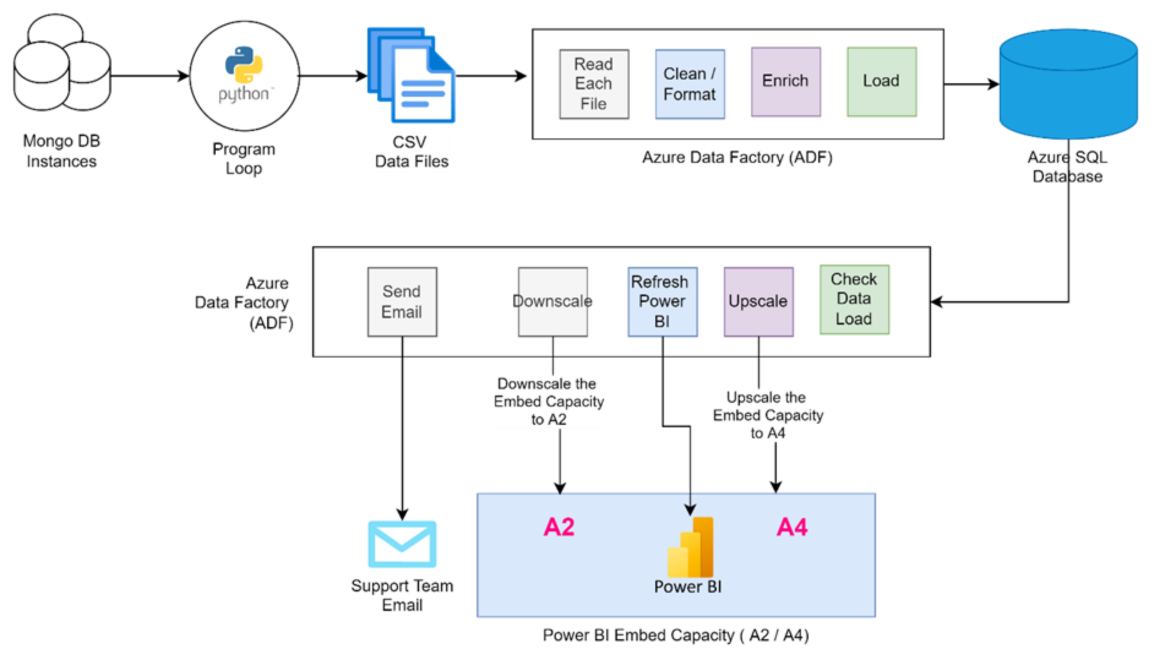

Each tenant’s data is stored in separate Mongo DB databases. But there is no way to extract it all at once. We must loop through each tenant DB to extract separately and then finally consolidate.

-

There is no parameterized connection (linked services) to Mongo DB from ADF. Hence the looping of tenant DB was not possible from ADF. A Python script was developed for this purpose.

-

The consolidated, final data model is more than 3 GB and hence had some performance issues. Had to fine-tune the data model and DAX queries for better performance.

-

The Power BI report was published in an Azure embedded capacity with size A2, which is fine from a performance point of view. However, Daily Refresh failed with insufficient memory. While debugging, we found that it was only loading fine in A4, but the cost was higher than the approved budget. An ADF pipeline was built to automatically upscale the embedding capacity to A4 before running the Data refresh to overcome this. This pipeline monitors the Power BI refresh until it automatically completes and downscales the capacity to A2. This had a marginal increase in the cost; however, the desired state was obtained with complete automation.

Value Addition

-

All the above-stated Business objectives are met in a very efficient way.

-

MBR/QBR reports are available every day to the business teams for better review and decision-making.

-

Reports are embedded as part of the Customer Portal, allowing the tenants to analyze the delivery performance in near-real time.

-

The Client enhanced the customer experience in the portal and monetized the same by giving report access in a subscription-based model.

-

The complete solution is built on Azure Cloud and hence reducing the load on the IT support team to manage the physical systems.

-

The entire data flow is automated end-to-end requiring no manual intervention.

-

The technical Team receives an automated email every day after the data load is completed successfully.

-

The technical team can restart the data flow Pipeline from any point in case it has to be rerun for some reason.